Abstract

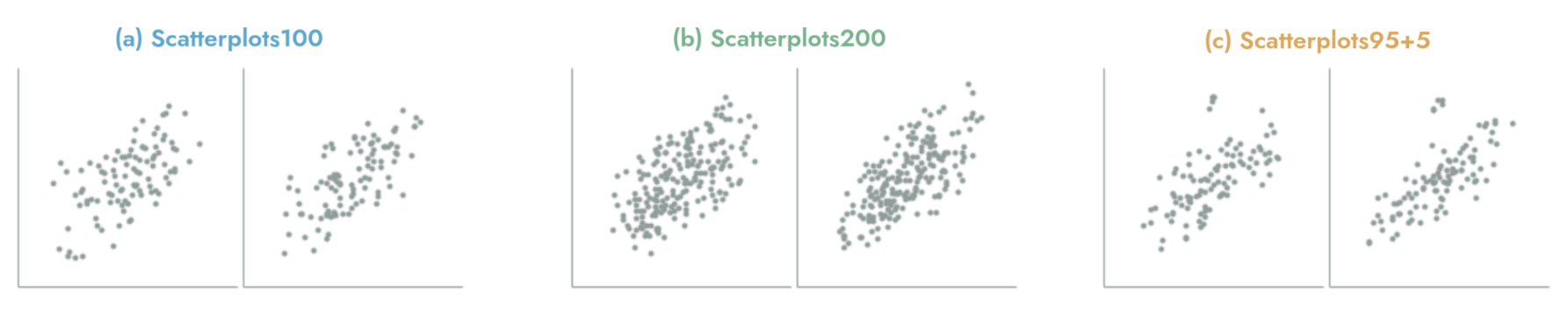

How deep neural networks can aid visualization perception research is a wide-open question. This paper provides insights from three perspectives (prediction, generalization, and interpretation) by training and analyzing deep convolutional neural networks on human correlation judgments in scatterplots across three studies. The first study assesses the accuracy of twenty-nine neural network architectures in predicting human judgments and finds that a subset of the architectures (e.g., VGG-19) achieves accuracy comparable to the best-performing regression analyses in prior research. The second study shows that the models resulting from the first study generalize better than prior models to two other judgment datasets collected with different scatterplot designs. The third study interprets the visual features learned by a convolutional neural network model, providing insights into how the model makes predictions and identifying potential features that could be investigated in human correlation perception studies. Together, these studies suggest that deep neural networks can serve as a tool for visualization perception researchers in devising empirical study designs and forming hypotheses about perceptual judgments. The preprint, data, code, and training logs are available at https://doi.org/10.17605/osf.io/exa8m.
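To make the kind of input concrete, the sketch below generates a scatterplot stimulus with a target Pearson correlation and rasterizes it into a grayscale image array of the sort a convolutional network takes as input. It assumes NumPy and a standard bivariate normal distribution; the function name `make_scatterplot`, the image size, the point count, and the one-pixel-per-point rendering are hypothetical choices for illustration, not the stimulus pipeline used in the paper.

```python
import numpy as np

def make_scatterplot(r, n_points=100, size=64, seed=None):
    """Hypothetical helper: sample n_points from a standard bivariate normal
    with correlation r and rasterize them into a size x size image."""
    rng = np.random.default_rng(seed)
    z1 = rng.standard_normal(n_points)
    z2 = rng.standard_normal(n_points)
    # Mixing two independent normals gives Corr(x, y) = r in expectation.
    x = z1
    y = r * z1 + np.sqrt(1.0 - r**2) * z2

    # Min-max normalize each axis to [0, 1] so every point lands in the plot.
    def to_unit(v):
        return (v - v.min()) / (v.max() - v.min())

    u, v = to_unit(x), to_unit(y)
    cols = np.minimum((u * size).astype(int), size - 1)
    # Image row 0 is the top, so flip the y axis.
    rows = np.minimum(((1.0 - v) * size).astype(int), size - 1)

    img = np.zeros((size, size), dtype=np.float32)
    img[rows, cols] = 1.0  # one lit pixel per data point (assumed rendering)

    # The sample correlation differs slightly from r because of sampling noise.
    sample_r = np.corrcoef(x, y)[0, 1]
    return img, sample_r
```

For example, `img, sample_r = make_scatterplot(0.7, seed=0)` returns a 64 x 64 array and the realized correlation of the sampled points. Returning the sample correlation rather than the target `r` is a deliberate choice here, since with few points the two can differ noticeably, and a model trained on images should be compared against what the plot actually shows.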

BibTeX

@inproceedings{yang2023can,
  title={How Can Deep Neural Networks Aid Visualization Perception Research? Three Studies on Correlation Judgments in Scatterplots},
  author={Yang, Fumeng and Ma, Yuxin and Harrison, Lane and Tompkin, James and Laidlaw, David H},
  booktitle={Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems},
  pages={1--17},
  year={2023}
}