Fair Ranking Visualization

Visual support for building and analyzing fair consensus rankings

In our society, ranking is a common tool for prioritizing among critical choices that affect people’s lives and livelihoods, for instance when evaluating people for outcomes like jobs, loans, or educational opportunities. Increasingly for such tasks, the judgments of human analysts are augmented by decision support tools or even replaced by fully automated screening procedures that rank candidates. This has prompted significant concern about the danger of inadvertently automating unfair treatment of historically disadvantaged groups of people. In addition, there is increasing awareness that unfairness also arises when human analysts make decisions, since people are subject to implicit bias. This interplay of human bias and machine learning poses new challenges for fair decision making and, with it, for the design of appropriate bias mitigation technologies.

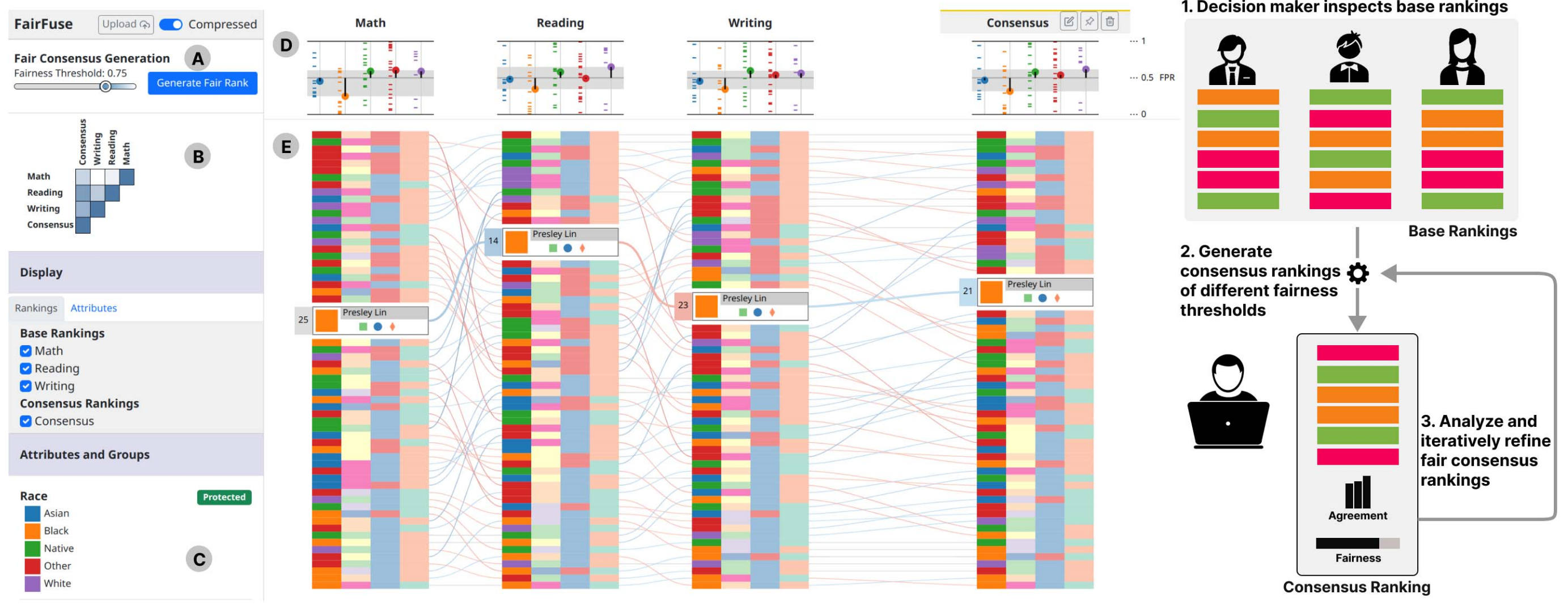

To tackle this important problem, recent research efforts have begun to focus on developing automatic methods for measuring and mitigating unfair bias in rankings. To date, however, these efforts all consider each ranking in isolation. One critical yet overlooked problem is ranking by consensus, where numerous decision makers produce rankings over candidate items, and those rankings are then aggregated to create a final consensus ranking.

Therefore, we propose to model the fair rank aggregation problem with formal constraints that capture prevalent group fairness criteria. This new fairness-preserving optimization model ensures measures of fairness for the candidates being ranked while still producing a representative consensus ranking following the base rankings. We will design a family of exact and approximate bias mitigation solutions to this optimization problem that guarantee fair consensus generation in a rich variety of decision scenarios.
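To make the idea of a fairness-constrained consensus concrete, the following is a minimal sketch and not the project's actual formulation. It assumes Kendall tau distance as the measure of how well a consensus represents the base rankings, and it uses a hypothetical two-group "parity gap" (the difference between the groups' shares of mixed-group pairs won) as a stand-in for a group fairness criterion. The function names `kendall_tau`, `parity_gap`, and `fair_consensus` are illustrative, and the brute-force search is only feasible for a handful of candidates.

```python
from itertools import combinations, permutations


def kendall_tau(r1, r2):
    """Count the candidate pairs that the two rankings order differently."""
    pos1 = {c: i for i, c in enumerate(r1)}
    pos2 = {c: i for i, c in enumerate(r2)}
    return sum(
        1
        for a, b in combinations(r1, 2)
        if (pos1[a] - pos1[b]) * (pos2[a] - pos2[b]) < 0
    )


def parity_gap(ranking, group):
    """Assumed fairness measure: gap between the groups' shares of
    mixed-group pairs in which their member is ranked higher."""
    wins = {g: 0 for g in set(group.values())}
    mixed = 0
    for i, a in enumerate(ranking):
        for b in ranking[i + 1:]:
            if group[a] != group[b]:
                wins[group[a]] += 1  # a is ranked above b
                mixed += 1
    if mixed == 0:
        return 0.0
    shares = [w / mixed for w in wins.values()]
    return max(shares) - min(shares)


def fair_consensus(base_rankings, group, max_gap):
    """Exhaustively search for the ranking with the smallest total
    Kendall tau distance to the base rankings, subject to
    parity_gap <= max_gap. Returns (ranking, cost)."""
    best, best_cost = None, None
    for cand in permutations(base_rankings[0]):
        if parity_gap(cand, group) > max_gap:
            continue  # violates the fairness constraint
        cost = sum(kendall_tau(cand, r) for r in base_rankings)
        if best_cost is None or cost < best_cost:
            best, best_cost = list(cand), cost
    return best, best_cost


# Hypothetical data: three decision makers rank four candidates
# belonging to two groups, X and Y.
group = {"a": "X", "b": "X", "c": "Y", "d": "Y"}
base_rankings = [
    ["a", "b", "c", "d"],
    ["a", "b", "d", "c"],
    ["b", "a", "c", "d"],
]

unconstrained = fair_consensus(base_rankings, group, max_gap=1.0)
constrained = fair_consensus(base_rankings, group, max_gap=0.0)
```

In this toy setting, the unconstrained consensus places both members of group X above both members of group Y, while the constrained search accepts a higher total disagreement with the base rankings in exchange for a parity gap of zero. This is the kind of trade-off between aggregation accuracy and fairness that the proposed optimization model is meant to capture.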

Further, we propose to integrate these fair rank aggregation methods into an interactive human-in-the-loop visual analytics system. This AEQUITAS technology will enable decision makers to collaboratively build fair consensus by leveraging visualization-driven interfaces that aim to facilitate understanding and trust in the consensus building process. AEQUITAS will support comparative analytics to visualize the impact of individual rankings on the final consensus outcome, as well as the trade-offs between accuracy of the aggregation and fairness criteria. Finally, we will conduct extensive user studies to understand how well fairness imposed by the AEQUITAS system aligns with human decision makers’ perception of fairness, and the ability of multiple analysts to collaborate effectively using the AEQUITAS technology.